Reflections on Product Prioritization Strategies

Published:

From an AI Product Management Course

A journey of understanding, evaluating, and gaining deeper awareness of what truly makes a difference in the world of products.

Continuing our educational series on Arabic language handling with large language models, this part explores how to fine-tune the Gemma2-9b model on an Arabic dataset using Keras, KerasNLP, and the LoRA technique. We cover how to set up the environment, load the model, make the necessary modifications, and train the model with model parallelism, which distributes the model's parameters across multiple accelerators.

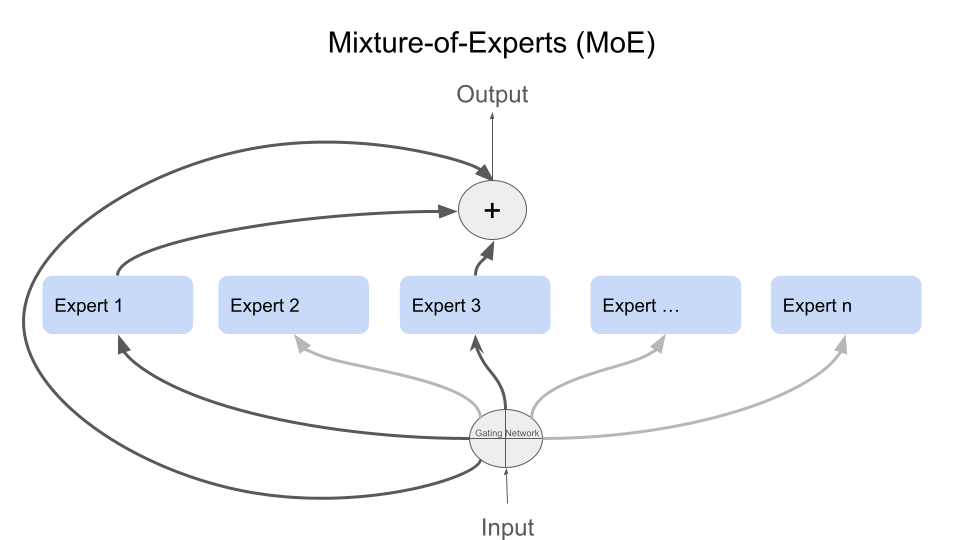

Image source [3]
